I was recently asked for a point of view on organizational metrics for a new group of Agile Teams at a big software engineering shop. The conversation led to this quick essay to collect my thoughts. Big topic, short essay, so please forgive omissions.

Context

Business circumstances and organizational philosophy dramatically influence metric and measure choices. At one extreme, frequent releases delivered when ready are the best business fit. At the other extreme, infrequent, date-driven deliveries addressing external constraints are the appropriate, if uncomfortable, fit. While the two share the basics, they differ in level of formality, depth, and areas of focus. Other considerations abound.

Another key dimension of metric selection is the type of work. When dealing with garden-variety architectural and design questions, the work has a clear structure and common metrics will work well. However, when there are significant architectural and design unknowns, it is hard to predict how long the work will take, so common metrics and measures are not as helpful.

So for this essay, I am assuming:

- Multiple Agile teams with autonomy matched to competency, or put another way, as much as they can handle.

- Teams own their work end to end, from development through operations.

- There are inter-team functional and technical dependencies.

- Frequent releases are delivered when ready (I won’t go deep into forecast vs. actuals, etc.).

- A traditional sprint-based delivery process (I am not saying that’s best, but it’s popular and easy to speak to in a general-purpose article).

Motivations: What Questions Are We Asking?

- Are we building the features our customers need, want, and use?

- Are we building technical and functional quality into our features?

- Given the business and market circumstances, is the organization appropriately reliable, scalable, sustainable, and fair?

- Is our delivery process healthy and are our people improving over time?

Principles

Single metric focus breeds myopia - avoid it: asking people to improve one measure will often cause that measure to appear to improve but at the cost of the overall system. This leads to…

Balance brings order: when you measure something you want to go up, also measure what you don’t want to go down. You want more features faster - what’s happened to quality? Are we courting burnout? It is easy to create a perverse incentive with a manager’s “helpful metric.”

Correlation is not causation: It is hard to “prove” things with data. Not because the data is scarce, though it often is, but because there are frequently many variables to consider. Understanding is a goal of measurement. Yet often the closer you look, the more variables you see and the less clear the relationships may become. So be warned: if it looks like only “a” drives “b,” you are likely missing “c,” “d,” and more. Be cautious.

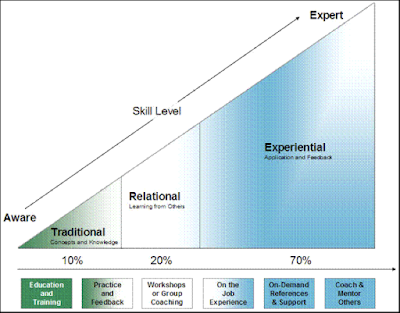

Competence deserves autonomy: a competent team should choose how to manage and solve their own problems, including what to measure. A manager has problems too, so the same holds for them: as long as the leader can explain why they need a measure and how it should help, they’re good. Forced on a team without its buy-in, it likely won’t work anyway.

Wisdom is hard won: Metric targets are seductive. Having no targets comes across as having no goals. Aimlessness. But bad targets are pathological and that’s worse. A competent, productive developer who’s not improving is better than one held to myopic targets they neither want nor value. Unless your aim is a dramatic change, set targets relative to current performance, not an ideal.

If you demand dramatic change, you better know what you're doing. No pithy advice for that. Good luck.

Measures and Metrics

Based on the circumstances, the level of formality and utility will vary.

Customer Value Measures

- Feature use - does our customer use the feature we built?

- Net promoter score - do they love our features enough to recommend them to others?
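As a rough illustration of the two measures above, here is a minimal sketch. It assumes the conventional NPS survey (a 0-10 "how likely are you to recommend" rating, with 9-10 counted as promoters and 0-6 as detractors) and a simple adoption ratio for feature use. The function names and the sample numbers are hypothetical, not taken from any particular tool.

```python
def net_promoter_score(ratings):
    """Return NPS (-100 to 100) from a list of 0-10 survey ratings.

    Assumes the conventional buckets: 9-10 promoters, 0-6 detractors.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


def feature_use_rate(users_of_feature, active_users):
    """Share of active users who used the feature at least once (assumed definition)."""
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    return users_of_feature / active_users


# Hypothetical sample data
print(net_promoter_score([10, 10, 9, 8, 2]))  # 3 promoters, 1 detractor out of 5
print(feature_use_rate(150, 600))
```

Either number is only a starting point for a conversation; how "active user" and "used the feature" are defined will change the result.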

Delivery (team level)

Sprint Metrics - simplistically said: Push work into a time box, set a sprint goal, commit to its completion, and use team velocity to estimate when they’ll be “done” with something. Most argue the commitment motivates the team. Some disagree.

Cumulative flow for Sprint-based work

- Velocity or points per sprint

  - Should be stable, all else being equal. Sometimes all else is not equal, which doesn’t mean something needs to be fixed, but it might. Sorry.

  - Using Average Points Per Dev can reduce noise by normalizing for team size/capacity changes week to week.

- Story cycle time

  - Time required to complete a development story in a sprint. When average cycle time exceeds two-thirds of sprint duration, throughput typically goes down as carryover goes up.

  - In general, analysis of cycle times through work steps across processes and teams can be very revealing (enables you to optimize the whole). But that doesn’t just fall out of Cumulative Flow.

- Backlog depth

  - Generally indicates functional or technical scope changes

  - Doesn’t capture generalized complexity or skill variances that impact estimates

- Work in process per workflow step

  - Shows when one step in the workflow is blocking work
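Two of the items above reduce to simple arithmetic: points per developer, and the two-thirds cycle time rule of thumb. The sketch below is one possible way to compute them, assuming you can export points completed, head count, and per-story cycle times from your tracker. All names and sample numbers are hypothetical.

```python
from statistics import mean


def points_per_dev(points_completed, dev_count):
    """Velocity normalized by the number of developers available in the sprint."""
    if dev_count <= 0:
        raise ValueError("dev_count must be positive")
    return points_completed / dev_count


def cycle_time_at_risk(cycle_times_days, sprint_days, threshold=2 / 3):
    """True when average story cycle time exceeds the given share of the sprint."""
    return mean(cycle_times_days) > threshold * sprint_days


# Hypothetical sprints: (points completed, developers available)
sprints = [(30, 5), (24, 4), (36, 6)]
print([points_per_dev(p, d) for p, d in sprints])

# Hypothetical story cycle times (days) in a 10-day sprint
print(cycle_time_at_risk([8, 7, 6], sprint_days=10))
print(cycle_time_at_risk([4, 5, 6], sprint_days=10))
```

In the sample data, raw velocity swings between 24 and 36 points while points per developer stays flat, which is the kind of noise the normalization is meant to remove.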

Work allocation

When one team does it all, end to end, keeping track of where the time goes helps you understand what you can commit to in a sprint. Also, products need love beyond making them bigger, so you need to deliberately set aside capacity for that.

- % capacity for new features

- % capacity for bugs/errors

- % capacity for refactoring and developer creative/learning time

- % production support
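The allocation percentages above can be derived from whatever unit the team already tracks (hours, points, or days). A minimal sketch, assuming logged hours per category; the category keys and the numbers are hypothetical:

```python
def allocation_percentages(effort_by_category):
    """Convert effort per category into a percentage of total capacity."""
    total = sum(effort_by_category.values())
    if total <= 0:
        raise ValueError("total effort must be positive")
    return {
        category: round(100.0 * effort / total, 1)
        for category, effort in effort_by_category.items()
    }


# Hypothetical hours logged in one sprint
sprint_hours = {
    "new_features": 50,
    "bugs_errors": 20,
    "refactoring_learning": 10,
    "production_support": 20,
}
print(allocation_percentages(sprint_hours))
```

Tracking the same breakdown over several sprints shows whether time for refactoring and learning is quietly being squeezed out by support work.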

Functional Quality

- Bugs

  - Number escaped to production - defects

  - Number found in lower environment - errors
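One way to relate the two counts above is an escape rate: the share of all bugs found that reached production. This ratio is an assumption layered on top of the two raw counts, not something the list prescribes, and the function name is hypothetical.

```python
def defect_escape_rate(escaped_to_production, found_in_lower_env):
    """Share of all bugs found that escaped to production (0.0 to 1.0)."""
    total = escaped_to_production + found_in_lower_env
    if total == 0:
        return 0.0  # nothing found anywhere, so nothing escaped
    return escaped_to_production / total


# Hypothetical counts for one release
print(defect_escape_rate(escaped_to_production=5, found_in_lower_env=15))
```

In the spirit of balance, a falling escape rate is only good news if the total number of bugs found is not falling because testing has been cut.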

Technical Quality

- Code quality

  - Unit test coverage %

  - Rule violations - security, complexity (cognitive load)... long list from static analysis tools

  - Readability - qualitative, but very important over time

- Lower environments (Dev, Test, CI tooling, etc.)

  - Uptime/downtime

  - Performance SLAs

Dependency awareness

Qualitative, exception-based. How well does the team collaborate with other teams and proactively identify and manage dependencies?

Team morale

Qualitative, could be a conversation, a survey, observation, etc.

Mean time to onboard new members / pick up old code

Most engineers don’t like to write documentation. Sure, “well-written code is self-documenting” but they also know better. A good test is how long it takes to onboard new members / pick up old code.

Estimating accuracy (forecast vs. actual variance)

- Top-down planning - annual or multi-year. Typically based on high-level relative sizing.

- Feature level - release or quarterly level. Typically, a mix of relative sizing at a medium level of detail.

- Task/story level - varies by team style. May be relative or absolute (task-based).
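At any of these levels, forecast vs. actual variance can be expressed as a signed percentage of the forecast. The sketch below assumes both values are in the same unit (points, days, or hours); the function name and sample figures are hypothetical.

```python
def forecast_variance_pct(forecast, actual):
    """Signed variance as a percentage of the forecast.

    Positive means the work took more than forecast, negative means less.
    """
    if forecast <= 0:
        raise ValueError("forecast must be positive")
    return 100.0 * (actual - forecast) / forecast


# Hypothetical feature-level estimates in story points: (forecast, actual)
features = {"search": (40, 50), "export": (40, 30)}
for name, (forecast, actual) in features.items():
    print(name, forecast_variance_pct(forecast, actual))
```

Keeping the sign matters: averaging absolute values hides whether a team consistently underestimates or simply varies in both directions.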

Cross-team metrics

- API usability, qualitative - for teams offering APIs, how much conversation is needed to consume the API?

- Mean time to pick up another team’s code - documentation and readability

- Cross-team dependency violations

So, there you have it. Some thoughts on principles, motives, and metrics for software engineering. Comments welcome. Be well.